Natural Language Processing: What Is It And Why Does It Matter

The ability to process and generate human language makes a computer far more useful: it breaks down barriers, simplifies human-computer interaction, opens the door to new kinds of computing systems, and boosts productivity.

This blog post explores natural language processing to understand how it might be of use to you and your business.

What is Natural Language Processing?

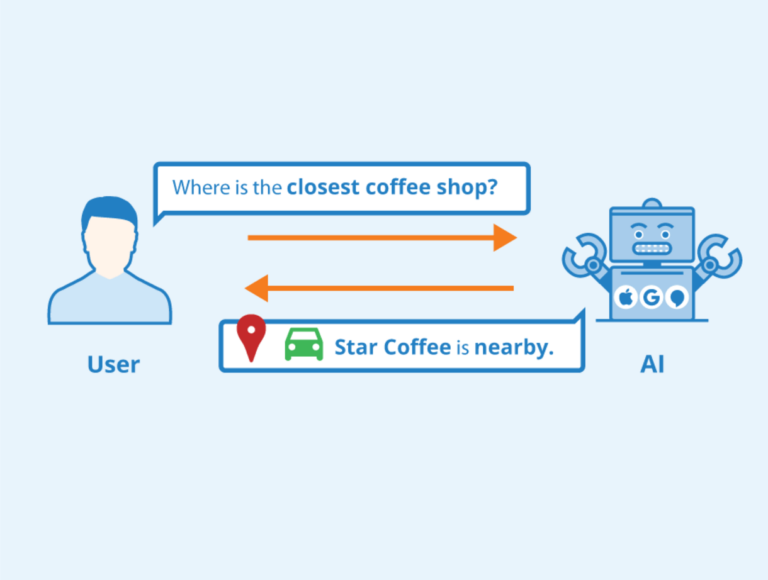

Natural Language Processing, also called NLP, is a sub-field of computer science and linguistics. It aims to provide computers with the ability to understand, interpret, and generate human languages.

Language lies at the core of human interactions and NLP is the bridge that connects humans to computers in the most natural way, including through text, speech, and even sign language.

Natural language processing dates back to the early 1950s, with the Georgetown-IBM experiment in 1954 that automatically translated over 60 Russian sentences into English. Developments continued through the later part of the century but most of those systems employed hand-written rules.

From the late 1980s, however, statistical NLP emerged, driven by ever-increasing and cheaper processing power. It used statistical models and machine learning techniques to discover patterns, relationships, and probabilities in large datasets such as parallel corpora. From the 2010s onward, neural networks became the preferred approach because of their much better performance.

Today, different types of neural networks are used for natural language processing. They include:

- Transformer models, such as BERT (Bidirectional Encoder Representations from Transformers)

- CNNs (Convolutional Neural Networks)

- RNNs (Recurrent Neural Networks)

- LSTM (Long Short-Term Memory) networks, a type of RNN.

These models are applied to tasks such as text generation, language understanding, speech recognition, and translation, processing the input data to produce the required output.

Why Does NLP Matter?

The applications of NLP are vast and continue to evolve. This makes it an important technology for many industries and uses. Here are a few examples:

- Machine Translation: NLP is used to translate text from one language to another, often with high accuracy and sound grammar.

- Virtual Assistants: From providing customer service to answering a host of questions, offering companionship, and running tasks through voice commands, NLP is helping to boost workers’ productivity and enhance the quality of life for many.

- Text Analysis & Summaries: NLP makes it easier to extract key information from large documents at impressive speed. It helps to summarize documents, texts, emails, or web pages faster than any human can.

- Sentiment Analysis: By understanding the emotions and opinions expressed in a text or document, businesses can extract valuable information for market research, social media monitoring, and future marketing campaigns.

How Natural Language Processing Works

Natural language processing combines linguistics and computer science to enable computers to understand and interpret human language. Its techniques range from rule-based approaches that rely on predefined rules, to statistical models that learn patterns from labeled training data, to more modern deep learning models that use neural networks to identify and categorize even more complex patterns in text.
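
To make the difference between rule-based and statistical approaches concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the word lists, the training sentences, and all function names are made up for this example, and the "statistical" model is a crude word count rather than a production technique.

```python
from collections import Counter

# Hypothetical hand-written rules: words someone decided are positive or negative.
POSITIVE_WORDS = {"good", "great", "love"}
NEGATIVE_WORDS = {"bad", "awful", "hate"}


def rule_based_label(text):
    """Label text using only the predefined word lists above."""
    words = text.lower().split()
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def train_word_counts(labeled_examples):
    """Count how often each word appears under each label (a crude statistical model)."""
    counts = {"positive": Counter(), "negative": Counter()}
    for text, label in labeled_examples:
        counts[label].update(text.lower().split())
    return counts


def statistical_label(text, counts):
    """Pick the label whose training examples shared more words with the text."""
    words = text.lower().split()
    pos = sum(counts["positive"][w] for w in words)
    neg = sum(counts["negative"][w] for w in words)
    if pos > neg:
        return "positive"
    if neg > pos:
        return "negative"
    return "neutral"


# Toy, made-up training data for illustration only.
TRAINING_DATA = [
    ("the delivery was fast and friendly", "positive"),
    ("fast and helpful support", "positive"),
    ("the package arrived broken", "negative"),
    ("slow and broken checkout", "negative"),
]

counts = train_word_counts(TRAINING_DATA)
print(rule_based_label("fast delivery"))           # neutral: no hand-written rule matches
print(statistical_label("fast delivery", counts))  # positive: learned from the examples
```

The rule-based function only knows the words its author wrote down, while the statistical one picks up "fast" and "delivery" from the labeled examples without anyone writing a rule for them.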

While different systems will vary in their implementations of NLP, a general process involving different steps is as follows:

- Text Preprocessing: This is the initial stage before all other work can begin. First, the body of text is broken down into smaller units called tokens, which may be individual words, sub-word pieces, or punctuation marks. This process is called tokenization, and it makes the text easier to organize and process. Other preprocessing tasks include lowercasing, where all the text is converted to lowercase letters for uniformity, and the removal of stopwords, which are common words such as "the" and "of" that contribute little to meaning.

- Part-of-Speech Tagging: This step assigns a grammatical tag to each of the tokens produced during preprocessing. The tags mark parts of speech such as nouns, verbs, adjectives, and adverbs. This step helps in understanding the input text’s syntactic structure.

- Named Entity Recognition (NER): A named entity is a specific, real-world item mentioned in the text, such as a person, a place, an organization, or a car model. This step involves the identification and categorization of the named entities in the text. The goal here is to extract potentially important information that will help to better understand the text.

- Parsing and Syntax Analysis: Here, you analyze the grammatical structure of the sentences in the text to understand the relationships between words and phrases. The goal of this step is to work out how each sentence is put together, which in turn supports interpreting its meaning and context.

- Sentiment Analysis: With sentiment analysis, you are looking to grasp the attitude or emotion expressed in the text. Sentiments can be positive, negative, or neutral, and they help paint a better picture of the overall attitude or opinion toward a particular topic.

- Language Modeling: This process involves building statistical or machine learning models that capture the patterns and relationships in language data. These models enable tasks such as language generation, machine translation, or text summarization.

- Output Generation: The final part is the generation of an output to the user. This is necessary for tasks such as language translation and text summarization.
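
As a rough illustration of the preprocessing and language modeling steps above, here is a minimal Python sketch using only the standard library. The stopword list, the example sentences, and the function names are assumptions made for this example. Real systems typically rely on libraries with far more complete tokenizers and models.

```python
import re
from collections import Counter, defaultdict

# A tiny illustrative stopword list; real systems use much longer ones.
STOPWORDS = {"a", "an", "the", "is", "of", "to", "and", "on"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def remove_stopwords(tokens):
    """Drop tokens that appear in the stopword list."""
    return [t for t in tokens if t not in STOPWORDS]


def train_bigram_model(sentences):
    """Count which word follows which: a very small statistical language model."""
    following = defaultdict(Counter)
    for sentence in sentences:
        tokens = tokenize(sentence)
        for current, nxt in zip(tokens, tokens[1:]):
            following[current][nxt] += 1
    return following


def most_likely_next(model, word):
    """Return the word seen most often after `word`, or None if it was never seen."""
    if word not in model:
        return None
    return model[word].most_common(1)[0][0]


tokens = tokenize("The cat sat on the mat.")
print(tokens)                    # ['the', 'cat', 'sat', 'on', 'the', 'mat']
print(remove_stopwords(tokens))  # ['cat', 'sat', 'mat']

model = train_bigram_model(["the cat sat", "the cat slept", "the dog sat"])
print(most_likely_next(model, "the"))  # cat (seen twice after "the", versus "dog" once)
```

Note that the stopwords are kept when training the bigram model, because a language model needs the full word order, while tasks that only care about content words can safely drop them.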

More Natural Language Processing Tasks

Aside from the process steps listed above, many other tasks are frequently employed in natural language processing to attain desired results. Here are some of the more popular ones.

- OCR: OCR stands for Optical Character Recognition, a technology that converts images of printed or handwritten text into machine-readable text. For instance, when you need to scan an invoice or receipt, extract the figures in it, and save them in your company’s database, you will use a software program with OCR capability. OCR on its own has limits, however, in word accuracy and in understanding context and meaning. With the addition of NLP, OCR programs can produce more accurate output with better contextual understanding, categorization, and actionable insights.

- Speech Recognition: From digital transcription services to voice assistants and voice-activated devices, speech recognition has many uses. It converts audio input into text, but a raw transcript is of limited use without the added information that context and sentiment analysis provide. NLP makes speech recognition far more useful by interpreting that text so it can be passed on to other systems and acted upon.

- Text-to-Speech: The transformation of written text to audible speech, often used to give chatbots and virtual assistants a human-like voice. Though the initial implementations had monotonous voices, more modern text-to-speech systems such as ElevenLabs have gotten so good that you can barely tell their output from a human voice.

- Natural Language Understanding: This is the process of working out what a piece of text means. It involves any task that improves comprehension and interpretation of the text, from named entity recognition to syntax and grammar analysis, semantic analysis, and various machine learning algorithms.

- Natural Language Generation: One of the most widely known tasks. Here, data is turned into words that any human can understand, for example by telling a story or explaining a result. This is what chatbots use to hold a conversation. Another type of natural language generation is text-to-text generation, where an input text is transformed into a different text. This method is used in summarization, translation, and rephrasing bots.

- Named Entity Recognition: NER or Named Entity Recognition is an information extraction sub-task that involves the identification and classification of items or entities into previously defined categories. Hence, NER helps the machine recognize specific entities, such as a person, car, or place from a text or document, thereby improving the extraction of meaningful information.

- Sentiment Analysis: This is another sub-field of natural language processing that tries to extract and understand emotions and personal opinions from text data. It classifies text as positive, negative, or neutral, and helps machines navigate the complexity of human communication, where it has to cope with complications such as sarcasm and cultural differences. Businesses employ it for market research, brand monitoring, customer support, and social media analysis.

- Toxicity Classification: When hate speech is posted on a forum or social media platform and a moderator bot automatically flags it, a toxicity classification model is at work. These systems are trained with machine learning to automatically identify and classify harmful content, such as insults, threats, and hate speech, in text data.

- Summarization: NLP makes it possible for AI models to quickly read large amounts of information that would take a human much longer, identify the most important parts of the text, and present them in a coherent form. This saves the user time and effort, boosts understanding, and improves decision-making.

- Stemming: A preprocessing method that reduces words to their root or base form, for example by stripping suffixes so that "jumping" and "jumped" both become "jump". This lets different forms of the same word be treated as one, which helps in analyzing the text.
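
To show the idea behind stemming, here is a deliberately naive Python sketch that strips a few common English suffixes. The suffix list and the `naive_stem` function are assumptions made for this illustration. This is not the Porter algorithm or any library's stemmer, and it will get many real words wrong.

```python
# Suffixes to strip, checked in this order (longest first).
# A deliberately naive rule set for illustration only.
SUFFIXES = ("ing", "ed", "es", "s")


def naive_stem(word, min_stem_length=3):
    """Strip the first matching suffix, keeping at least `min_stem_length` letters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem_length:
            return word[: -len(suffix)]
    return word


for w in ["jumping", "jumped", "jumps", "cats", "sing"]:
    print(w, "->", naive_stem(w))
```

The minimum stem length keeps short words such as "sing" intact, but simple rules like these still produce odd results for words like "running", which is why established stemmers use many more rules.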

Real-World NLP Applications

Here is a list of different real-world applications of natural language processing and related technologies.

- Chatbots like ChatGPT.

- AI translators, for example from English to German or Russian to French.

- Virtual assistants like Apple’s Siri, Amazon’s Alexa, and OpenAI’s ChatGPT.

- Auto-correct systems like Grammarly.

- Search engines like You.com.

- Text summarization tools, such as the summaries ChatGPT can produce.

Challenges In NLP

While natural language processing has made significant advances in many areas, there are still issues facing the technology. Here are some of the major ones:

- Ambiguity & Context: Human languages are complex and inherently ambiguous. So, it remains an uphill task for machines to completely grasp human communication in all situations.

- Data & Model Bias: AI systems often inherit biases from the data they were trained on. So, however good a model is, it is likely to carry some bias, which raises ethical concerns.

- Lack of Reasoning: Machines also lack the common sense and reasoning that come naturally to humans, and building these into a system is a tough task.

Resources For Learning NLP

- Stanford NLP Group: https://nlp.stanford.edu/

- Coursera: https://www.coursera.org/

- DeepLearning.AI: https://www.deeplearning.ai/resources/natural-language-processing/

- Fast Data Science: https://fastdatascience.com/guide-natural-language-processing-nlp/

- Kaggle: https://www.kaggle.com/

- Natural Language Toolkit: https://www.nltk.org/

- Hugging Face: https://huggingface.co/

- Wikipedia: https://en.m.wikipedia.org/wiki/Natural_language_processing

- Machine Learning Mastery: https://machinelearningmastery.com/

- Awesome NLP: https://github.com/keon/awesome-nlp

- Amazon Comprehend: https://aws.amazon.com/comprehend/

- Google Cloud Natural Language: https://cloud.google.com/natural-language

- SpaCy: https://spacy.io/

Conclusion

Natural language processing is a fascinating field of artificial intelligence that is enabling machines to do things that were unthinkable decades ago. This technology has expanded the realm of computer applications and is creating new markets.

You have seen the many different capabilities, real-world applications, and available tools to help you get started with NLP. However, it’s up to you to find ways to leverage them in developing intelligent systems that will unlock your potential and that of your business.