Edge computing: What is it? Benefits, Risks & more

What is edge computing, and how can your company benefit from it? We answer all your questions here.

Edge computing is a framework for distributed computing that brings the computing and data storage capabilities of enterprise software closer to the users or data sources.

The goal of edge computing is to reduce latency between a user and a server and to minimize an application’s bandwidth usage. It is estimated that 175 zettabytes of data will be generated worldwide by 2025, with edge devices creating more than half of that total.

Edge computing is not a standardized technology. Rather, it is an architectural approach for further optimizing data center and cloud systems.

This post looks at edge computing and what it means for the Internet in general, for infrastructure, and for the development of future applications.

A Short History

The early Internet was simple: you set up a server and visitors came to it. But as the web grew and began carrying heavy media content like videos, servers became overloaded, network links became congested, and latency ballooned.

Content delivery networks (CDNs) evolved as an ingenious yet practical solution to this problem. You could keep your website as it was but outsource the delivery of heavy data like videos. Because CDNs operate servers in many locations, they make it easier and cheaper for even smaller firms to improve their website’s performance.

When a user visits such an optimized website, the company’s server delivers the web page as usual. Heavy content, however, is not served from that server. The page’s code simply references the content’s location on the CDN, so the browser loads it directly from there.

As a result, everything loads faster, because the CDN server delivering the video is closer to the user than the company’s original server.
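The mechanism described above can be illustrated with a minimal sketch. It rewrites references to heavy media files in a page so the browser fetches them from a CDN hostname instead of the origin server. The hostname `cdn.example.com`, the list of "heavy" file extensions, and the function name `rewrite_media_urls` are all hypothetical. In practice, CDNs are usually configured through the provider's own tooling rather than through hand-written rewriting like this.

```python
import re

# Hypothetical CDN hostname; a real deployment would use the one its provider assigns.
CDN_HOST = "https://cdn.example.com"

# File types treated as "heavy" content in this sketch (an assumption, not a standard).
HEAVY_EXTENSIONS = ("mp4", "webm", "jpg", "png")

# Matches src="/path/file.ext" attributes that point at the origin server.
_SRC_PATTERN = re.compile(
    r'src="(/[^"]+\.(?:' + "|".join(HEAVY_EXTENSIONS) + r'))"'
)


def rewrite_media_urls(html: str) -> str:
    """Point heavy media references at the CDN and leave everything else untouched."""
    return _SRC_PATTERN.sub(lambda m: f'src="{CDN_HOST}{m.group(1)}"', html)


if __name__ == "__main__":
    page = '<video src="/media/intro.mp4"></video><script src="/app.js"></script>'
    # Prints: <video src="https://cdn.example.com/media/intro.mp4"></video><script src="/app.js"></script>
    print(rewrite_media_urls(page))
```

The page itself still comes from the company's server. Only the heavy video reference now points at the CDN, while the small script keeps loading from the origin.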

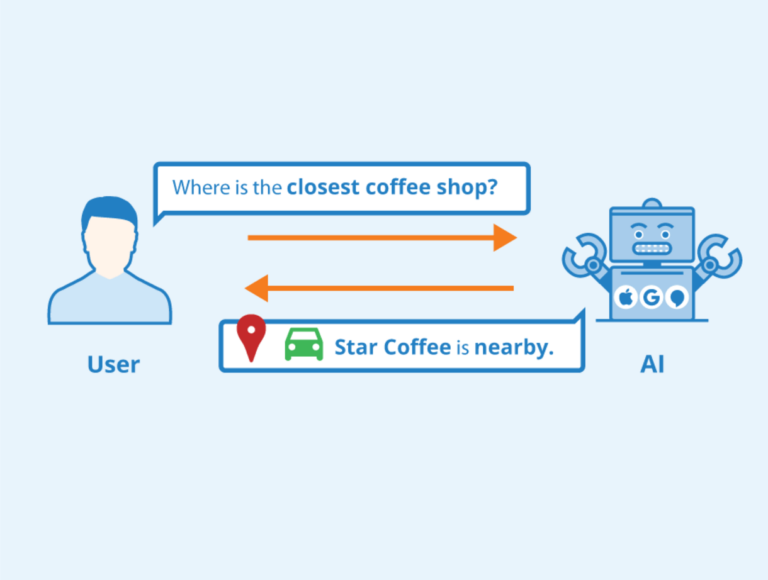

Edge computing takes this approach a step further. Instead of only delivering content from nearby locations, it brings the computation of time-critical or latency-sensitive tasks closer to the user. This shift is driven by Internet users and connected devices producing more data than ever before.

How Edge Computing Works

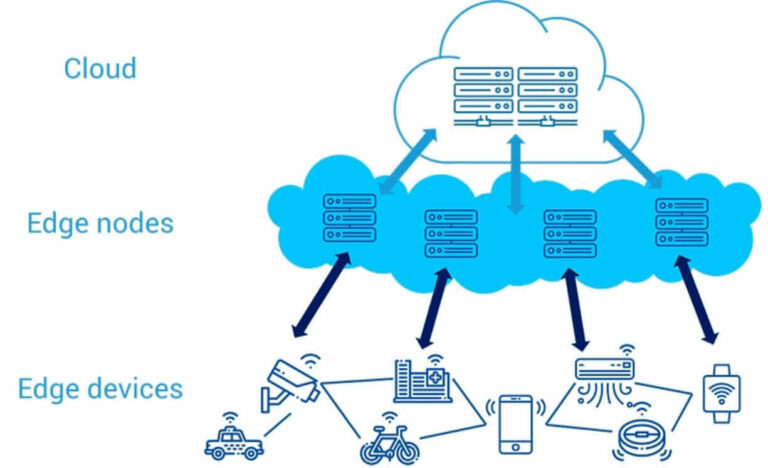

Edge computing is a layer between an Internet user and either a website’s data center or the cloud. The edge is any computing infrastructure closer to the user than a cloud data center. Enterprise applications are automatically deployed, updated, and terminated on the edge.

Edge computing has no single standard, so different companies implement it in their own ways. Most implementations, however, need the following components:

- Cloud – Your core computing system. This could be a service like AWS or Google Cloud, or your own private cloud.

- Edge Nodes – Any hardware system that can execute code and connect back to the cloud data center over the Internet.

- Management Platform – A software application or operating system to manage software deployment and administration across all edge nodes and the cloud.

- Automation – A system that balances load between your cloud and edge nodes, deploying and terminating the right application on the right edge node when needed, without human input. Container orchestration platforms such as Kubernetes are well suited to this role.
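The placement decision that the automation layer makes can be sketched in a simplified form. In this sketch, each node advertises its latency to users and its spare CPU capacity, and each application declares a latency budget and a CPU requirement. The scheduler uses the cloud whenever it meets the budget and otherwise picks the fastest edge node with enough capacity. The `Node`/`App` classes, the `place` function, and the numbers in the example are hypothetical illustrations. They are not how Kubernetes or any particular edge platform actually schedules workloads.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    latency_ms: float  # typical round-trip time to the users this node serves
    free_cpus: float   # spare capacity currently available
    is_cloud: bool = False


@dataclass
class App:
    name: str
    max_latency_ms: float  # latency budget the application must meet
    cpus: float            # CPU capacity the application needs


def place(app: App, nodes: list[Node]) -> Optional[Node]:
    """Choose a node for `app` and reserve its capacity, or return None."""
    feasible = [
        n for n in nodes
        if n.free_cpus >= app.cpus and n.latency_ms <= app.max_latency_ms
    ]
    if not feasible:
        return None
    # Sort key: cloud nodes first (False < True), then lowest latency.
    # Using the cloud whenever it meets the budget keeps scarce edge
    # capacity free for latency-critical work.
    chosen = min(feasible, key=lambda n: (not n.is_cloud, n.latency_ms))
    chosen.free_cpus -= app.cpus
    return chosen


if __name__ == "__main__":
    nodes = [
        Node("cloud-region", latency_ms=120, free_cpus=1000, is_cloud=True),
        Node("edge-a", latency_ms=8, free_cpus=4),
        Node("edge-b", latency_ms=12, free_cpus=2),
    ]
    apps = [
        App("video-analytics", max_latency_ms=20, cpus=3),
        App("batch-reports", max_latency_ms=1000, cpus=50),
        App("robot-control", max_latency_ms=10, cpus=2),
    ]
    for app in apps:
        node = place(app, nodes)
        print(app.name, "->", node.name if node else "no node meets the requirements")
```

With these made-up numbers, each application is handled differently:

- `video-analytics` lands on `edge-a`.
- The batch job goes to the cloud.
- `robot-control` cannot be placed, because no remaining node meets both its 10 ms budget and its CPU need.

A real system would then provision more edge capacity or alert an operator.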

There are two ways to implement edge computing:

- Cloud Services – Major cloud platforms, including Google Cloud, AWS, and Azure, offer integrated services that make it easier to implement edge computing.

- DIY Architecture – Here, you set up the hardware and server software yourself. For example, Red Hat offers a hybrid cloud platform called OpenShift and a lighter-weight offering called Red Hat Device Edge that is designed for building edge nodes.

The Benefits of Edge Computing

Hosting applications at the edge offers benefits for a wide range of use cases, including:

- Latency Reduction – Because edge nodes sit closer to edge devices, data has a shorter distance to travel, which means less latency (the time needed for data to travel from a device to the server and back).

- Improved Operational Efficiency – Combining the low latency of the edge with the large compute resources of the cloud lets each task run where it is handled most efficiently.

- Improved Reliability – Systems that combine a core cloud with edge nodes are more resilient, because edge nodes can often keep serving local users even when the connection to the cloud is interrupted.

- Lower Bandwidth Costs – Sending less data across the Internet to a company’s cloud data center can lead to significant cost savings, depending on the application.

- Regulatory Compliance – Processing users’ data in their own locality can help companies comply with data privacy regulations.
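The bandwidth benefit can be made concrete with a minimal sketch of one common pattern. It assumes a sensor that reports a temperature reading every second. Instead of forwarding every raw reading, the edge node sends one summary per minute upstream. The summary fields, the JSON encoding, the simulated readings, and the function names are illustrative assumptions, and real savings depend entirely on the application.

```python
from __future__ import annotations

import json
import random
import statistics


def make_windows(minutes: int, per_minute: int, seed: int = 0) -> list[list[float]]:
    """Simulate per-second temperature readings, grouped into one-minute windows."""
    rng = random.Random(seed)
    return [
        [round(rng.uniform(18.0, 25.0), 2) for _ in range(per_minute)]
        for _ in range(minutes)
    ]


def summarize(window: list[float]) -> dict:
    """Reduce one window of raw readings to a compact summary."""
    return {
        "count": len(window),
        "min": min(window),
        "max": max(window),
        "mean": round(statistics.mean(window), 2),
    }


def bytes_sent(windows: list[list[float]], aggregate: bool) -> int:
    """Size of the JSON payload sent upstream, with or without edge aggregation."""
    if aggregate:
        payload = [summarize(w) for w in windows]
    else:
        payload = [reading for w in windows for reading in w]
    return len(json.dumps(payload).encode("utf-8"))


if __name__ == "__main__":
    hour = make_windows(minutes=60, per_minute=60)
    raw = bytes_sent(hour, aggregate=False)
    summarized = bytes_sent(hour, aggregate=True)
    print(f"raw: {raw} bytes, summarized: {summarized} bytes ({raw / summarized:.1f}x smaller)")
```

The trade-off is that the cloud no longer sees individual readings. This pattern therefore suits applications where summaries are enough, while anything that needs the raw data must either be processed at the edge or still be sent in full.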

Challenges & Risks

Edge computing also comes with challenges. The major ones include:

- Larger Attack Surface – A system spread across many nodes offers more potential entry points for attackers, with risks ranging from infrastructure security to user privacy.

- Increased Security Challenges – Physically securing infrastructure in many locations is harder than securing a single data center.

- Limited Compute Capacity – Typical edge infrastructure offers far less computing power than a cloud environment, which makes the cloud the first choice for purely compute-intensive applications.

Edge Computing Vs Cloud Computing

The edge is part of the cloud: an extension of it that offers similar services physically closer to the user and improves computational efficiency by handling time-critical tasks.

You cannot have an edge architecture without first having a cloud. And whenever timing and latency are not critical, the cloud retains its advantages over the edge, such as greater compute capacity.

Edge Computing and Artificial Intelligence

Some artificial intelligence applications, such as security and identification systems, can benefit immensely from low-latency networks. Edge computing makes it possible to run machine learning models on or close to the edge devices that generate the data.

The advantages include faster response times, lower bandwidth consumption, and improved security. Edge computing and AI are complementary technologies that will probably continue to strengthen each other.

Edge Computing and 5G Networks

5G networks promise higher speeds and lower latency, but a typical round trip from a user to a cloud data center and back takes 100-300 ms. On their own, then, 5G networks can offer higher speeds but not lower end-to-end latency.

To reach the 10-20 ms latencies that would let them shine, 5G networks need to be integrated with edge computing. Only at these lower latencies will autonomous vehicles, industrial machines, and many other real-world applications become viable.
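The arithmetic behind this argument can be sketched in a few lines. The key fact is that distance alone sets a floor on round-trip time. Light in optical fiber travels roughly 200 km per millisecond, so a data center 3,000 km away cannot answer in less than about 30 ms, however fast the radio link is. The 8 ms overhead (standing in for radio access, queuing, and processing) and the two distances are illustrative assumptions, not measurements.

```python
# Light in optical fiber covers roughly 200 km per millisecond (about two thirds
# of its speed in a vacuum). Real routes are longer than straight-line distance,
# so this gives a lower bound.
FIBER_KM_PER_MS = 200.0


def propagation_rtt_ms(distance_km: float) -> float:
    """Minimum round-trip time imposed by distance alone (there and back)."""
    return 2 * distance_km / FIBER_KM_PER_MS


def fits_budget(distance_km: float, overhead_ms: float, budget_ms: float) -> bool:
    """True if propagation plus all other delays fits within the latency budget."""
    return propagation_rtt_ms(distance_km) + overhead_ms <= budget_ms


if __name__ == "__main__":
    budget = 20.0   # upper end of the 10-20 ms target
    overhead = 8.0  # hypothetical radio access + queuing + processing time
    for label, km in [("edge node, 30 km away", 30), ("cloud region, 3,000 km away", 3000)]:
        rtt = propagation_rtt_ms(km) + overhead
        print(f"{label}: ~{rtt:.1f} ms, fits budget: {fits_budget(km, overhead, budget)}")
    # Prints:
    # edge node, 30 km away: ~8.3 ms, fits budget: True
    # cloud region, 3,000 km away: ~38.0 ms, fits budget: False
```

Faster radio links only shrink the overhead term. Only moving the computation closer shrinks the distance term.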

Popular Edge Devices

Edge devices collect and process data locally while interacting with their physical environments or performing other useful functions.

The following are popular device types that can benefit from an edge-computing architecture:

- Smart speakers

- Smartphones

- Robots

- Smartwatches

- Internet of Things (IoT) devices

- Autonomous vehicles

- Point-of-sale (POS) systems

Edge Computing Applications

A wide range of industries can benefit from edge computing. Here are some of its applications:

- Smart grids for efficient electric power generation and distribution.

- Smart homes that cater to their occupants’ needs.

- Smart cities with infrastructure monitoring, transportation, and security applications.

- Modern agriculture systems with IoT sensors and climate control to boost yields.

- Traffic management in cities.

- Autonomous navigation services for drones, cars, and military applications.

- Remote asset monitoring, such as oil and gas installations.

- Manufacturing plants that apply Industry 4.0 principles.

- Retail stores and product warehouse management.

- Patient monitoring systems for hospitals.

- Predictive maintenance of high-tech products such as engines.

- Speech and audio processing applications.

- Machine learning applications.

- Virtual and augmented reality systems.

- Security systems.

- Streaming and content delivery services.

Infrastructure & Service Providers

Depending on how you want to approach edge computing, different providers offer different solutions. Here are some of the leading options:

- KubeEdge – Open-source system that extends Kubernetes container orchestration to edge devices.

- Red Hat OpenShift – Distributed cloud operating system.

- Alef Private Edge – Plug-and-play edge-as-a-service offering.

- Azure IoT Edge – Microsoft’s runtime for deploying and managing containerized workloads on IoT devices.

- Google Cloud – Fully managed cloud and edge computing as-a-service.

- ClearBlade – Edge computing software solution.

Frequently Asked Questions (FAQs)

Is 5G possible without edge computing?

Yes. But without edge computing, 5G cannot deliver the low latencies that many of its most promising applications need.

Does edge computing differ from fog computing?

Yes. Edge computing happens at the edge of the network, on or near the devices that generate data, while fog computing refers to computing that happens anywhere between the edge and the cloud.

Will edge computing replace cloud computing?

No. Edge computing is an extension of cloud computing, not a replacement for it.

How does edge computing reduce latency?

Edge computing reduces latency because data travels a shorter distance, and usually passes through fewer network hops, on its way to the server that processes it.

How does edge computing benefit IoT?

Edge computing brings compute capabilities closer to IoT devices, which reduces bandwidth usage and makes real-time processing possible.

Conclusion

As this overview of edge computing’s benefits and applications shows, the practice is here to stay and will only continue to grow.

Although different industries might have different needs, it is probably in your organization’s best interest to find ways of leveraging edge computing before your competition does.